Oragami - Sat, Jan 6, 2024

How Computers Think

This post is a continuation, adding depth to another post. As a refresher, I made the following claim:

> "[T]he model itself is just a collection of *numbers*. It is a very specific set of numbers, but nothing more than data nonetheless."

The “collection of numbers” are actually called “matrices” (no, not like The Matrix, like this), and they describe ways to “map” other numbers. Technically, they are just “lists,” or even “lists of lists” and so on, but they describe ways of transforming other lists of numbers. In simple terms, you can think of them as machines that take a location on a map and spit out a different location. A single matrix might be used to take a destination on a map, represented numerically, like longitude and latitude, and tell you what other location is 20 feet to the right (“translation”), twice as far from you in the same direction (“stretching”), or the same distance away but in the 5 o’clock direction (“rotation”). Neural networks also let you fold the map itself, using something called a “nonlinearity”, whose sequential folds can be used to crumble the whole map, such that an ant on the surface could walk across folds and find itself on the opposite end of the original map.

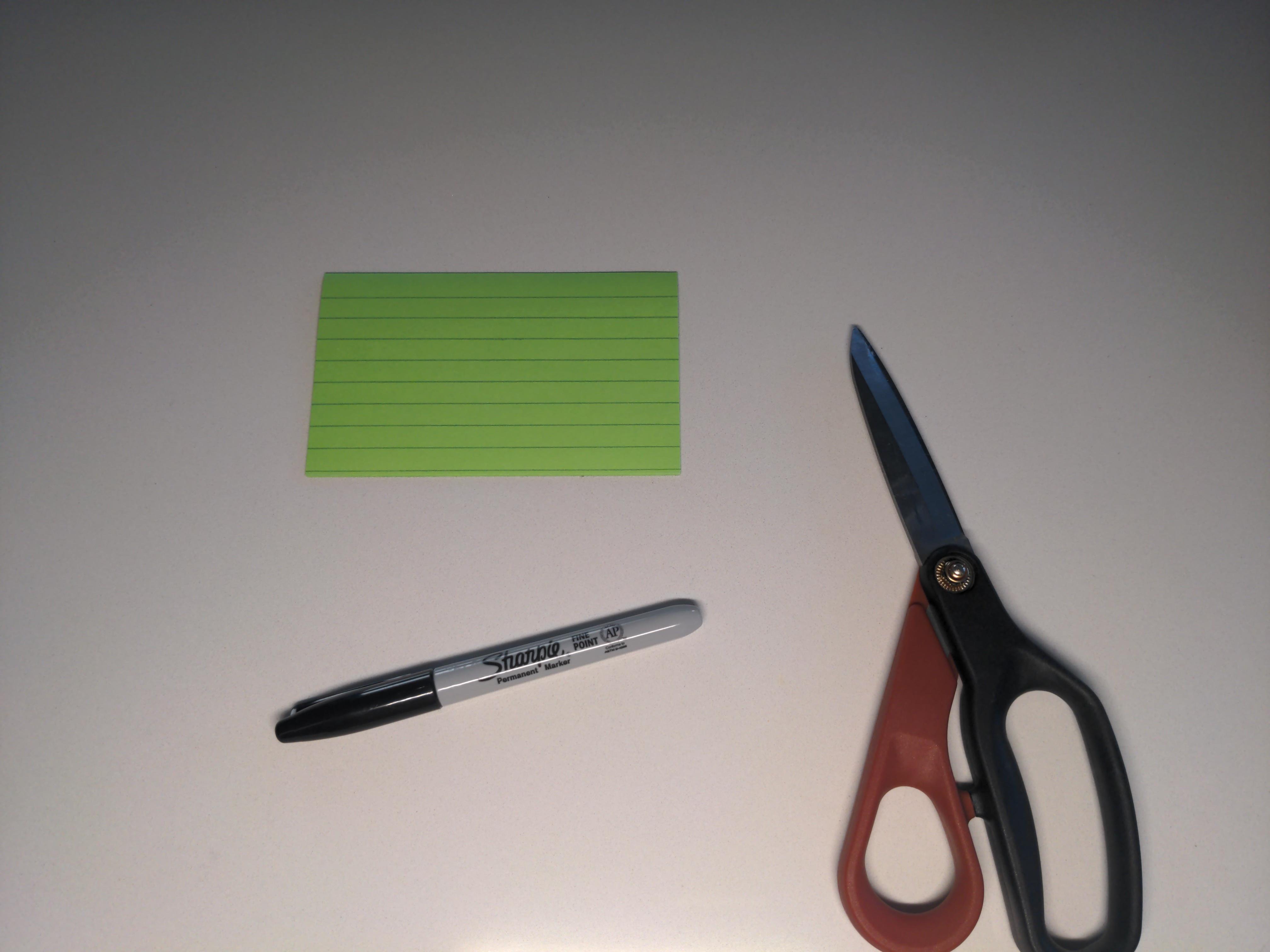

This might not seem particularly useful, until you consider applications. So, I’ll offer an example, not of a neural network but of a “physical” map that we want to separate by color. If you’d like to follow along, all you’ll need is a piece of lined paper, a pen, and a pair of (ideally very good) scissors.

If you don’t have these supplies handy, use your imagination. Otherwise…

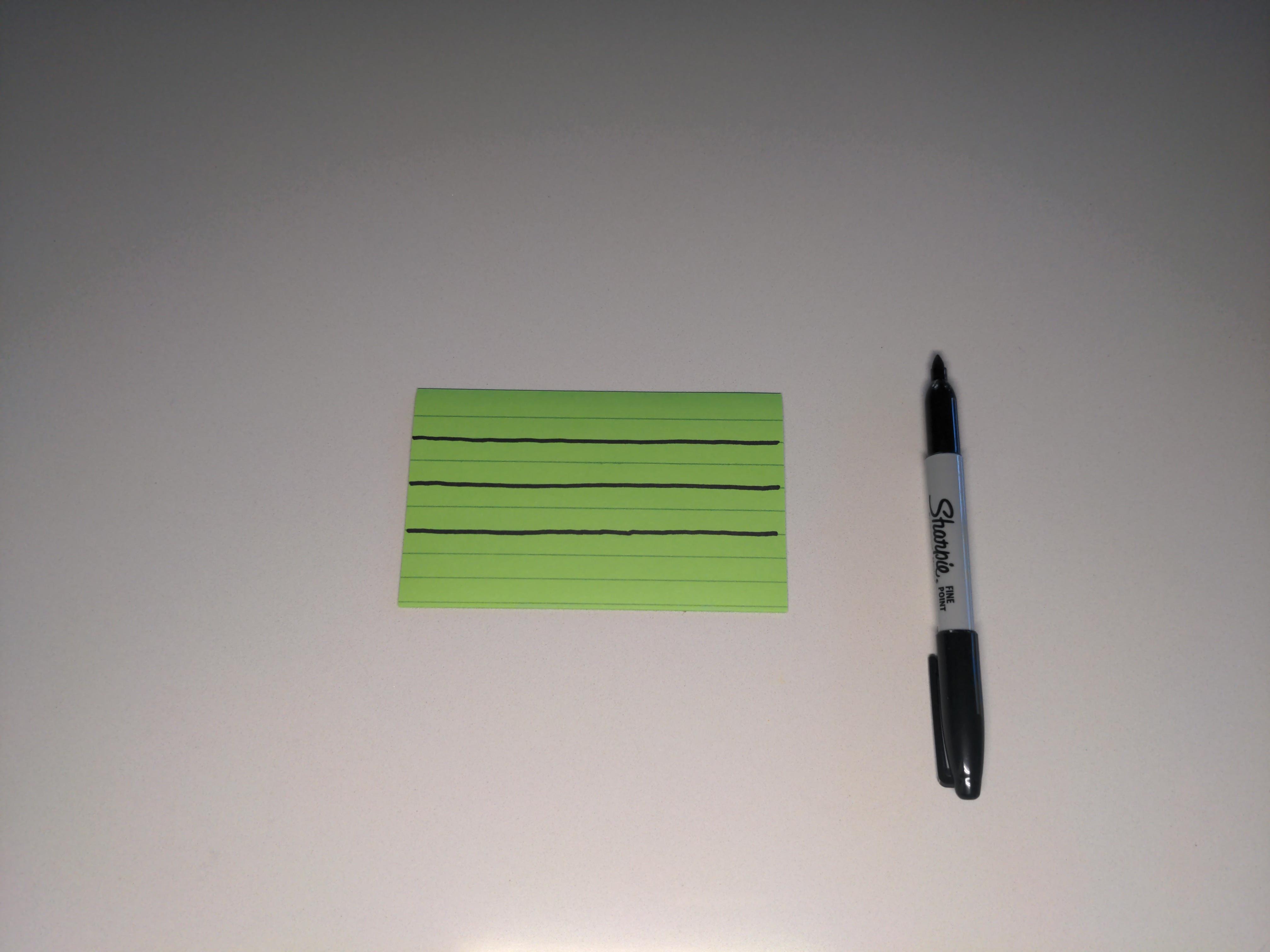

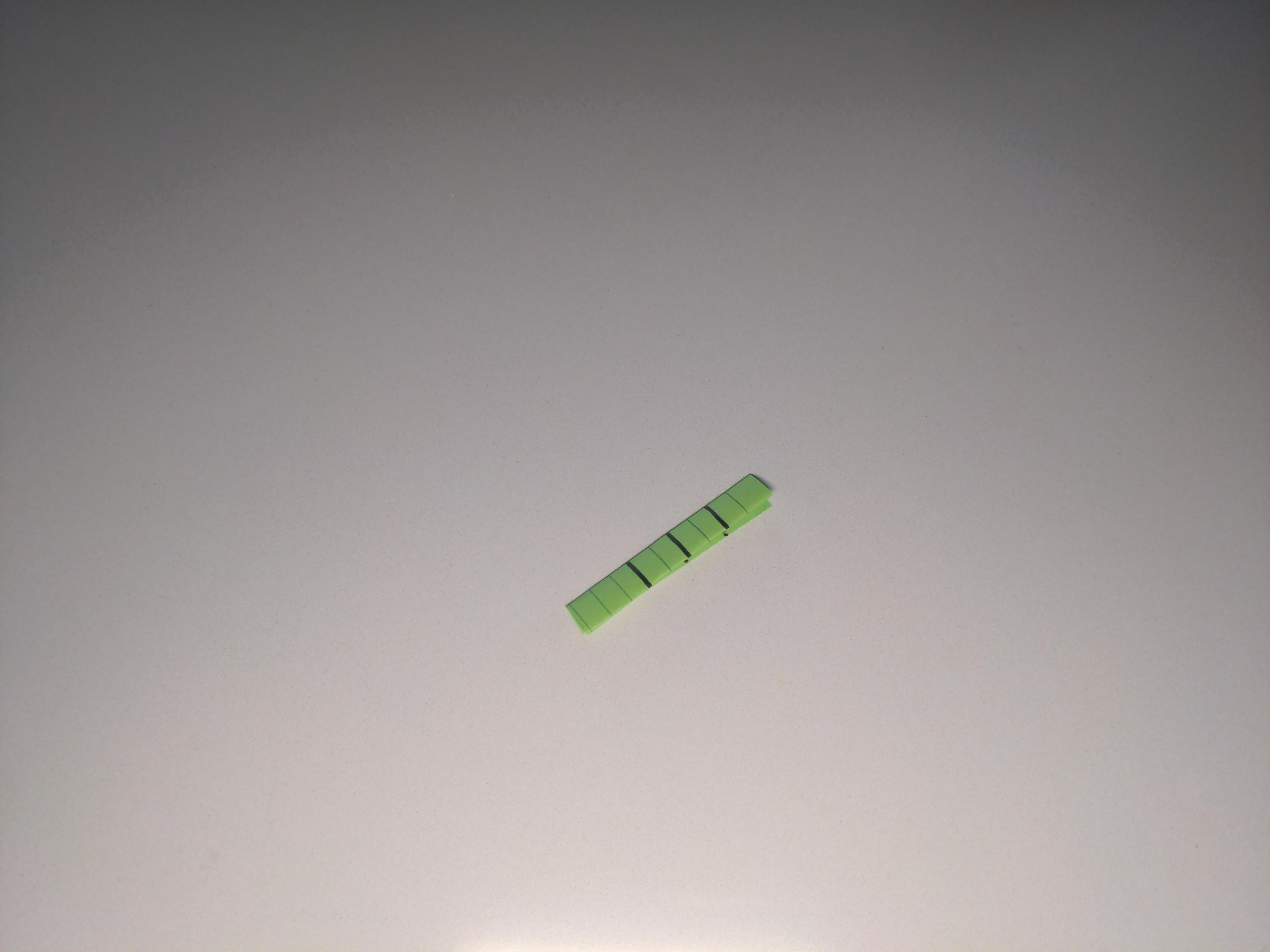

1. Begin by drawing a few parallel lines with the marker as below. It doesn't matter how many, but things will be much easier for you (without loss of descriptiveness) if you only draw two or three. Also, the further apart the better.

The goal will be to, with the least amount of cutting possible, separate all of the colored space from the uncolored space. In fact, we’ll aim to do this with a single (maximally small) cut. At first, this seems an impossible task, until you think about picking up the paper and deforming it.

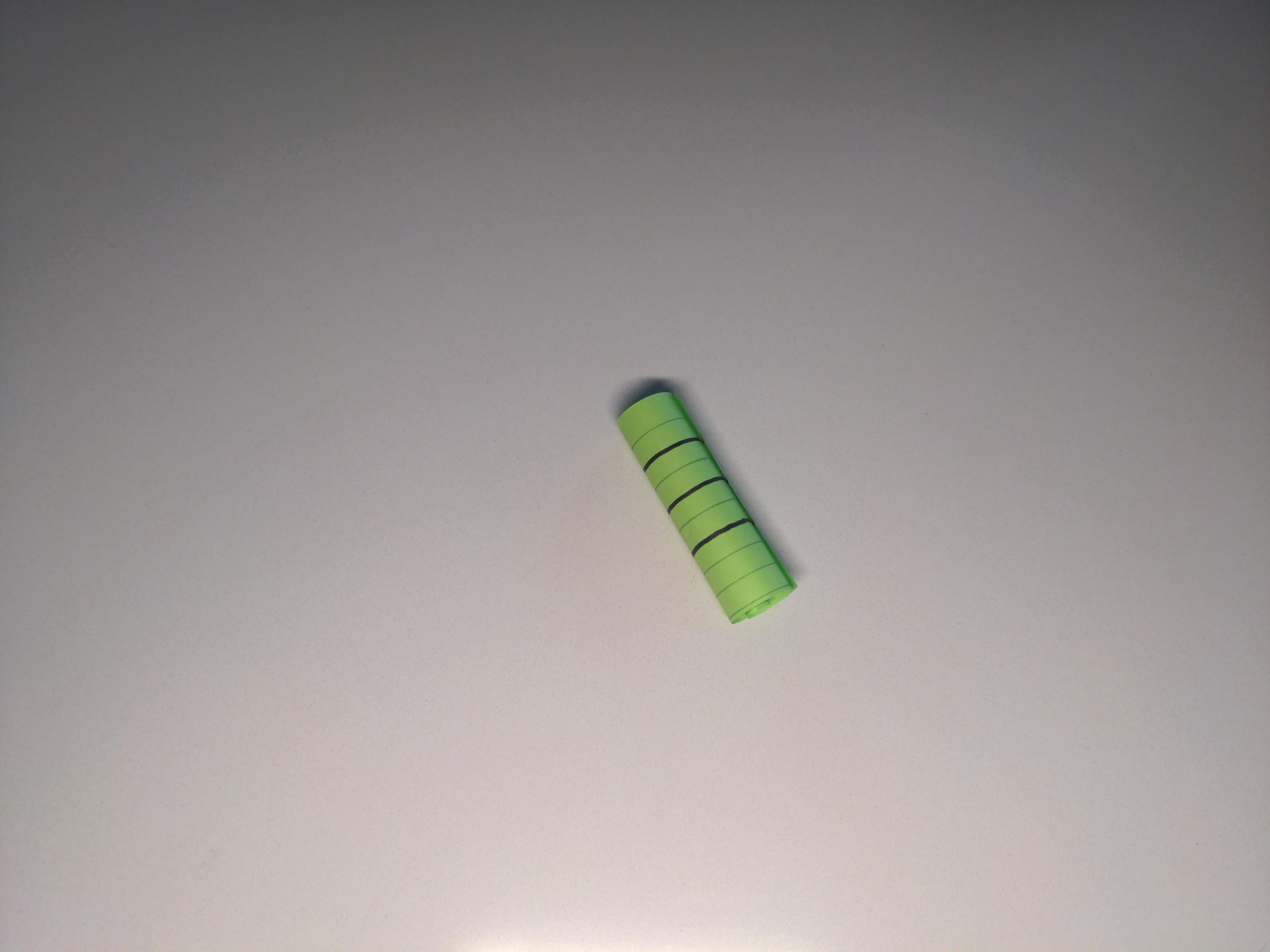

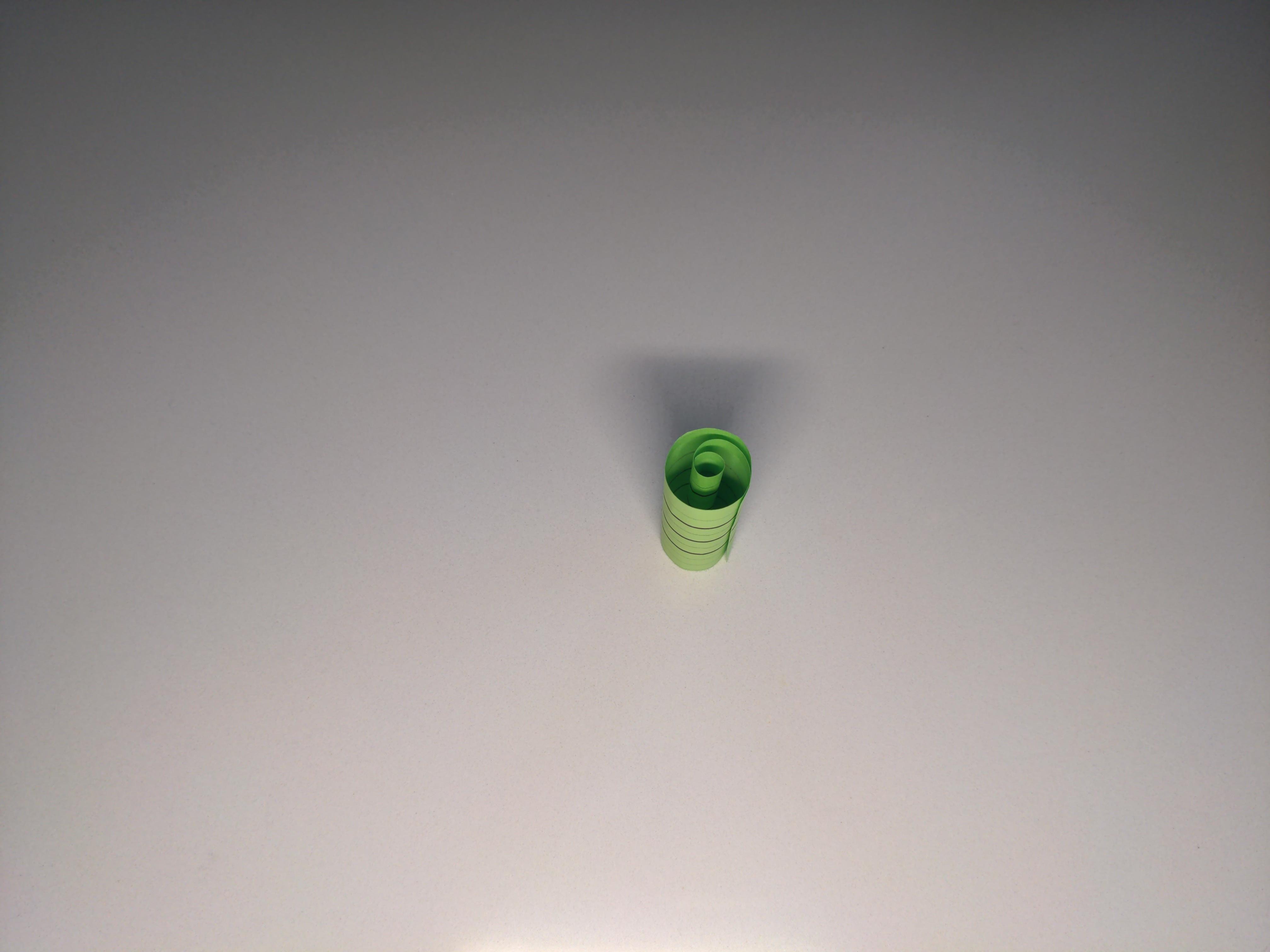

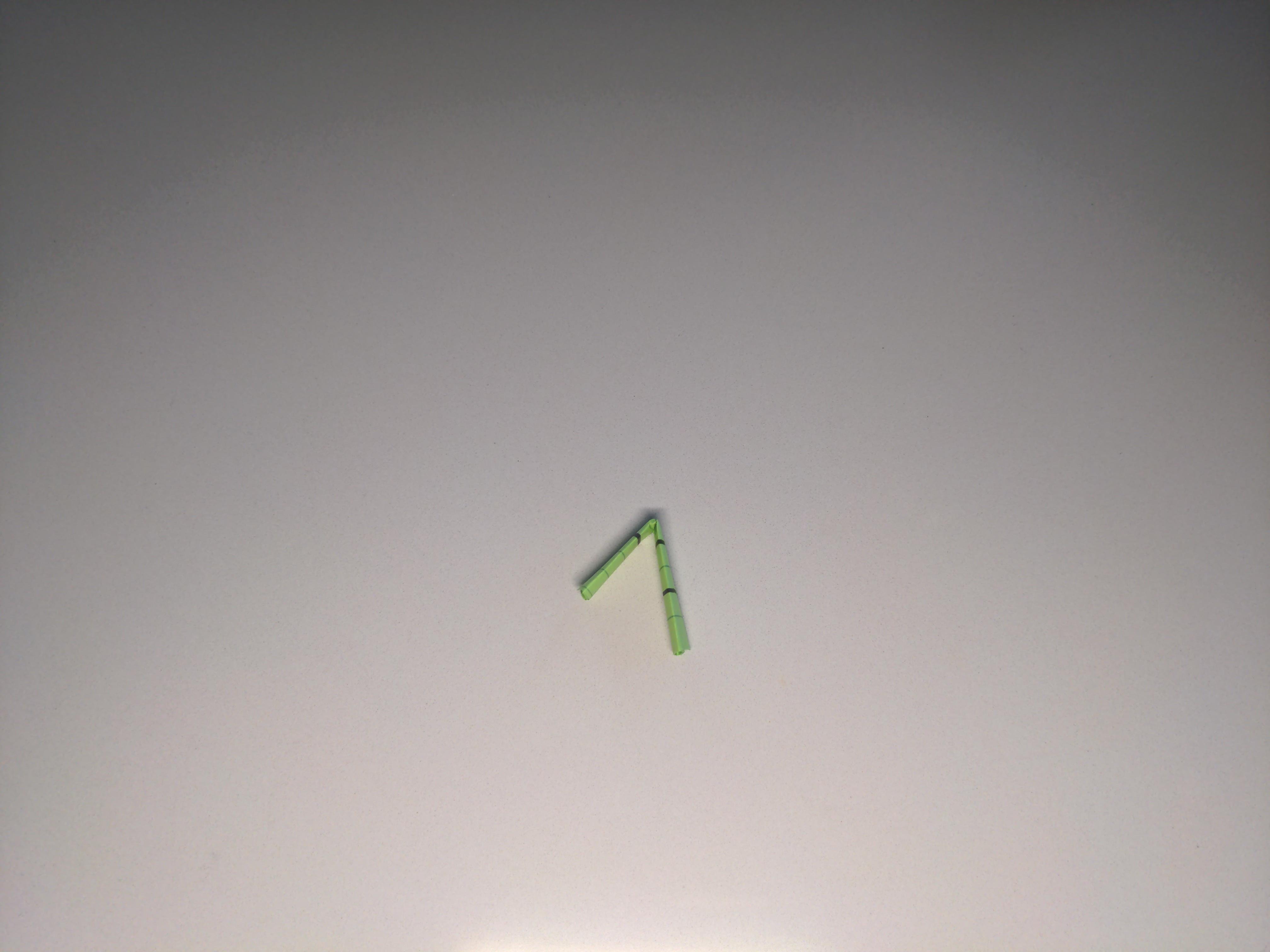

2. Roll the whole paper into a straw, along the stripes, as depicted below. The tighter the better.

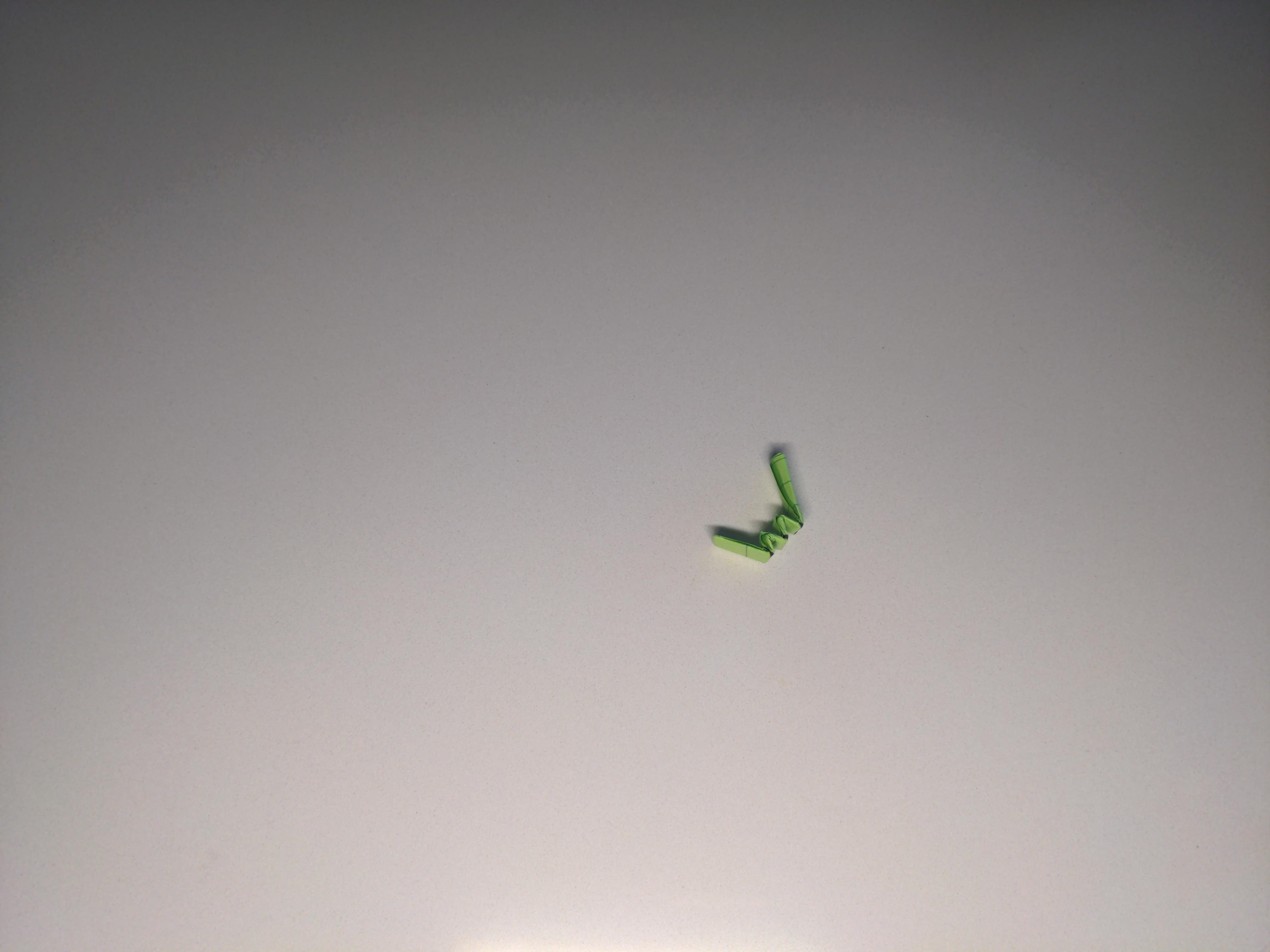

3. Next, for simplicity, we'll squash the roll flat on its side.

4. Now, we'll begin bending the strip like an accordion, such that each of the colored lined end up on a fold, touching one another.

5. At last, we use the scissors to make our single cut, just below the collection of colored folds.

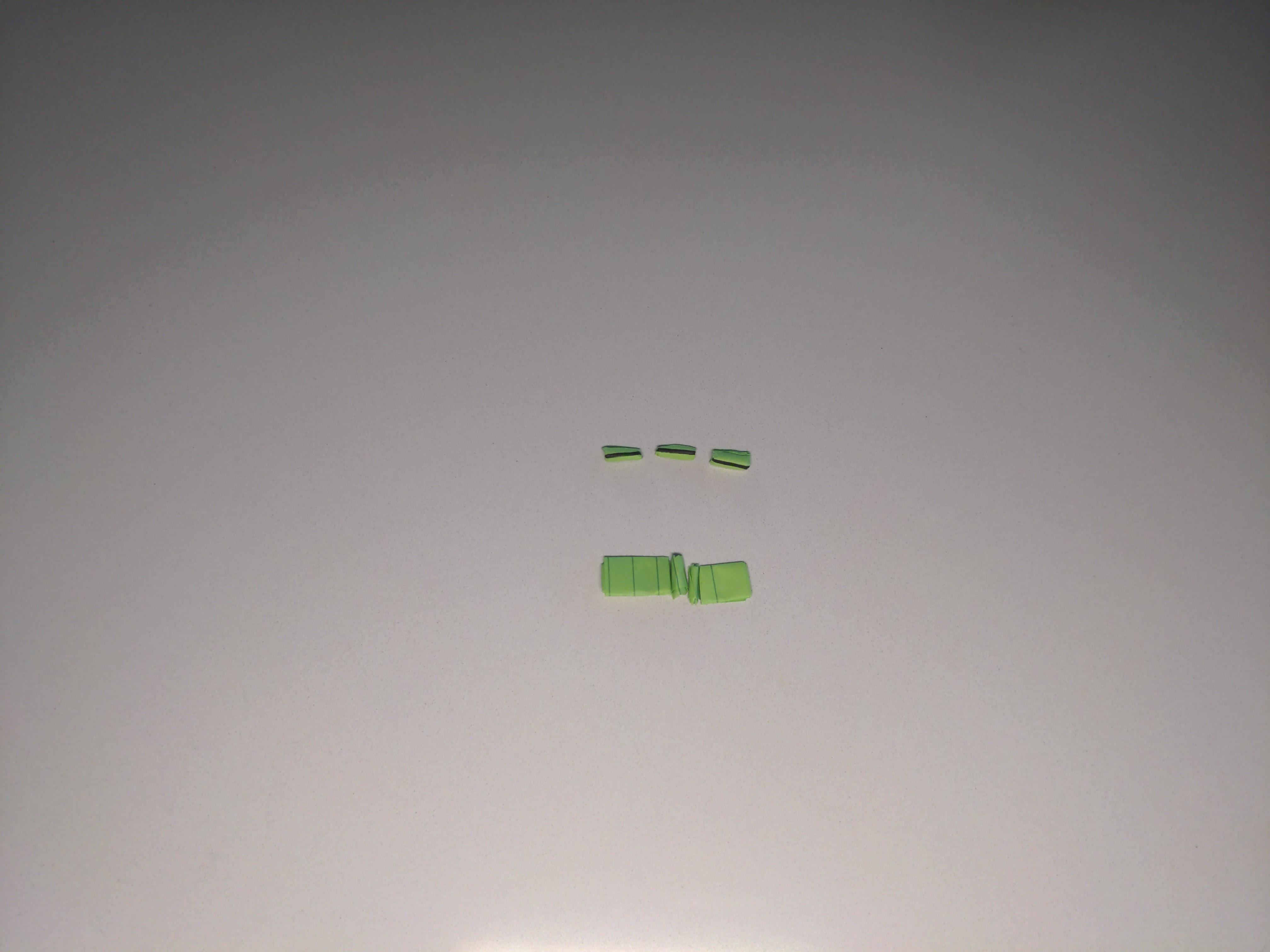

6. We're left with a few colored pieces and uncolored pieces (in my case, having drawn three lines, three and four, respectively).

7. Disregard the uncolored pieces.

8. Finally, we can unfurl the colored pieces to reveal the entirety of the original lines drawn in the first step.

This task would have been impossible, were it not for our ability to lift our 2D paper into 3D space and manipulate it therein.

So, what’s the point of this exercise, and how does it apply to machine learning or neural networks?

Classification

First, the cut made by the scissors can be thought of as making a statement about a boundary–in cutting, we are saying everything on one side of this cut is one thing, and everything on the other side is another thing. A cut, in this sense, and especially a single cut, represents the simplest possible way to divide two categories of thing. Two categories; one cut. On our original paper, this was impossible. To describe (let alone cut) the state of the original paper would require a lengthy description about parallel lines, running the full length of the paper, at regular intervals of such and such length. To describe the state of our completed oragami requires… “snip.”

In the world of AI/ML, we would call this a cutting process a “binary classification.” Especially in the field of computer vision, it is import to be able to separate things into groups across potentially fuzzy boundaries. In such cases, the map is a more abstract concept and in much higher dimension than two, but I’ll offer an example that keeps things in a very visualizable 2D.

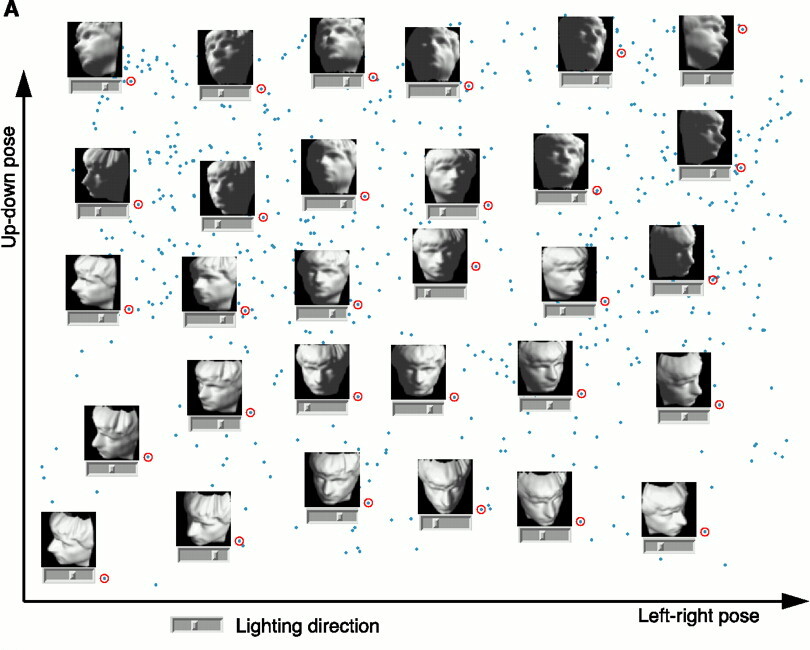

Consider a collection of photographs of a stone bust, varied along two features: looking left vs. right and looking up vs. down.1 The image below should help somewhat in visualizing this.

The plotted images should appear pretty sensibly placed: as you move left to right along a row of images, the bust appears to rotate, and, as you move up and down along a column of images, the camera appears to move up and down. However, these images were not plotted by a human, but by a computer. Without going into the actual details of how that was performed, I only want you to use this as an example of a more abstract notion of a map. The axes measure things like angle of camera and rotation of subject. Now, imagine you are a computer program that needs to rank images of cats and dogs by how confident you are they are cat or a dog. Basically, what I’m asking you to do is to define a single axis, where (arbitrarily defined) leftness corresponds to cat-likeness and rightness corresponds to dog-likeness, such that the subject species of images in the middle is difficult to discern. This would be something like one of those creepy “Animorphs” book covers:

Here’s a similarly creepy but more apt example I generated with Dalle:

One way you might approach this task would be to define a set of features that make something a dog, and then add up how many an image has. For example, whiskers are a good sign something that can only either be a cat or a dog is a cat, but a cat without whiskers isn’t necessarily a dog, so you need more than that. A good example might be morphological features, like jowl prominence and overall size. None of these features is sufficient, but they offer good hints in aggregate, at least statistically. E.g. a 40-pound animal that has jowls and no whiskers is much more likely to be a dog than a cat, so it gets, like, three points: no whiskers, yes jowls, over 20 pounds. A cat might get zero points for having whiskers, no jowls, and being under 20 pounds. In this case, score is the single axis along which something is defined to be either a dog or a cat.

Armed with this score, we can make our metaphorical “cut.”

Dimensionality Reduction

But what we’ve really done is taken something three-dimensional–whiskers, jowls, and weight–and flattened then squeezed it into a single dimension–score. Just like the oragami, but starting in 3D! The images of animals, our data, can be plotted as a cloud of dots (points) in 3D, each dot representing a single image by its three recorded features, just like the plot of stone bust photos (in 2D with two recorded features). Even though we lack the mental hardware to visualize a physical analog to higher-than-three-dimensional space, computers can perform mathematical oragami all the same.

In another sense, what we’ve done with our oragami is to arrange our data in a more sensible fashion for the performance of a particular task. Further, we’ve done so in a repeatable fashion, such that a new data point could be added to the 3D point cloud and, after repeating the same sequence of flattening and squeezing then cutting, it would end up on (hopefully) the correct side. Thus, any point in the original space of any number of dimensions can be mapped onto a single line (one dimension) by some sequence of “transformations” (stretches, squeezes, flattenings, rotations, and folds).

If all this talk of stretching and rotating and folding makes you hungry (???), you’ll love my final analogy…

Good pasta dough, made from scratch and by hand, lives life like a three-dimensional point cloud fed into a neural net. Read that again.

If every “molecule” of pasta is thought of as a point, then a ball of dough is a three-dimensional point cloud. As shown in the video, the preparation of the pasta dough–the kneading–involves repetitive sequences of squishing, stretching, rotating, and folding. press, fold, rotate… press, fold, rotate…

Now, if you imagine tossing a small bead into the dough. As the dough undergoes a sequence of these kneading operation, the bead will travel around the ball of dough. This is exactly what happens to a new cat photo in our neural net, only with the caveat that, if it is a good neural net, all of the “cat-like” points will end up on the same part of the dough.2

How Computers Learn

So, how exactly do we end up with a good neural net?

Because the specific steps of the oragami are not known in advance and are impractical to intelligently design, neural nets perform a sort of evolutionary process to just keep trying random stuff until something starts to work, and then do more of it.

This means trying a lot of stuff, but eventually, with enough compute, it basically tends to work very well, permitting that there is indeed something, like a pattern, to learn in the first place.

Stay Tuned: More Content Coming Soon

-

This example actually appears in Science and is from a paper called A Global Geometric Framework for Nonlinear Dimensionality Reduction by Tenebaum et al (2000). ↩︎

-

Note that, in this example, the dimension of the data (analogized by the pasta ball) is not actually reduced–at least not necessarily. The “kneading” operations merely move things around. ↩︎

Free Consultation for New Clients

The AI revolution underway **right now** is changing the world. We're here to help you understand it and keep up. If you'd like to learn more about what we do, we'd like to meet you.

Get in touch with us today to find out how we can help your business grow.